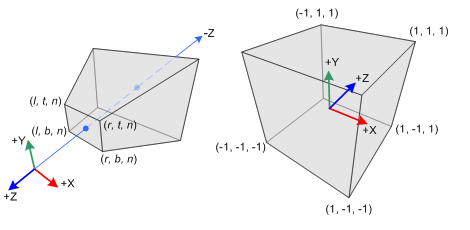

The canonical view volume is a cube with its extreme points at $(-1, -1, -1)$ and $(1, 1, 1)$.

Some points expressed in view space won’t be part of the view volume and will be discarded after the transformation. This process is called clipping: we only need to check whether any coordinate of a point is outside the range $[-1, 1]$.
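
As a minimal sketch of the range check described above, assuming the point has already been transformed into the canonical view volume (the function name is hypothetical, chosen only for illustration):

```python
def inside_canonical_view_volume(point):
    """Return True when every coordinate of `point` lies in [-1, 1].

    `point` is assumed to be an (x, y, z) triple that was already
    mapped into the canonical view volume.
    """
    return all(-1.0 <= coordinate <= 1.0 for coordinate in point)


# A point on the boundary is kept, a point outside is discarded.
kept = inside_canonical_view_volume((0.0, 0.5, -1.0))
discarded = not inside_canonical_view_volume((0.0, 1.2, 0.0))
```

This only classifies single points; clipping lines or triangles that cross the boundary needs more work than this check.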

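Later it’ll be seen that both transformations imply division. A neat trick is the use of projective geometry to avoid performing the division inside the matrix: any point that has the form $(\alpha x, \alpha y, \alpha z, \alpha)$ with $\alpha \neq 0$ represents the same 3D point $(x, y, z)$, so the division by the last coordinate can be postponed until after the matrix multiplication.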

Orthographic projection

An orthographic projection matrix is built with 6 parameters:

- left, right: planes perpendicular to the $x$-axis

- bottom, top: planes perpendicular to the $y$-axis

- near, far: planes perpendicular to the $z$-axis

These parameters bound the view volume, which is an axis-aligned bounding box.

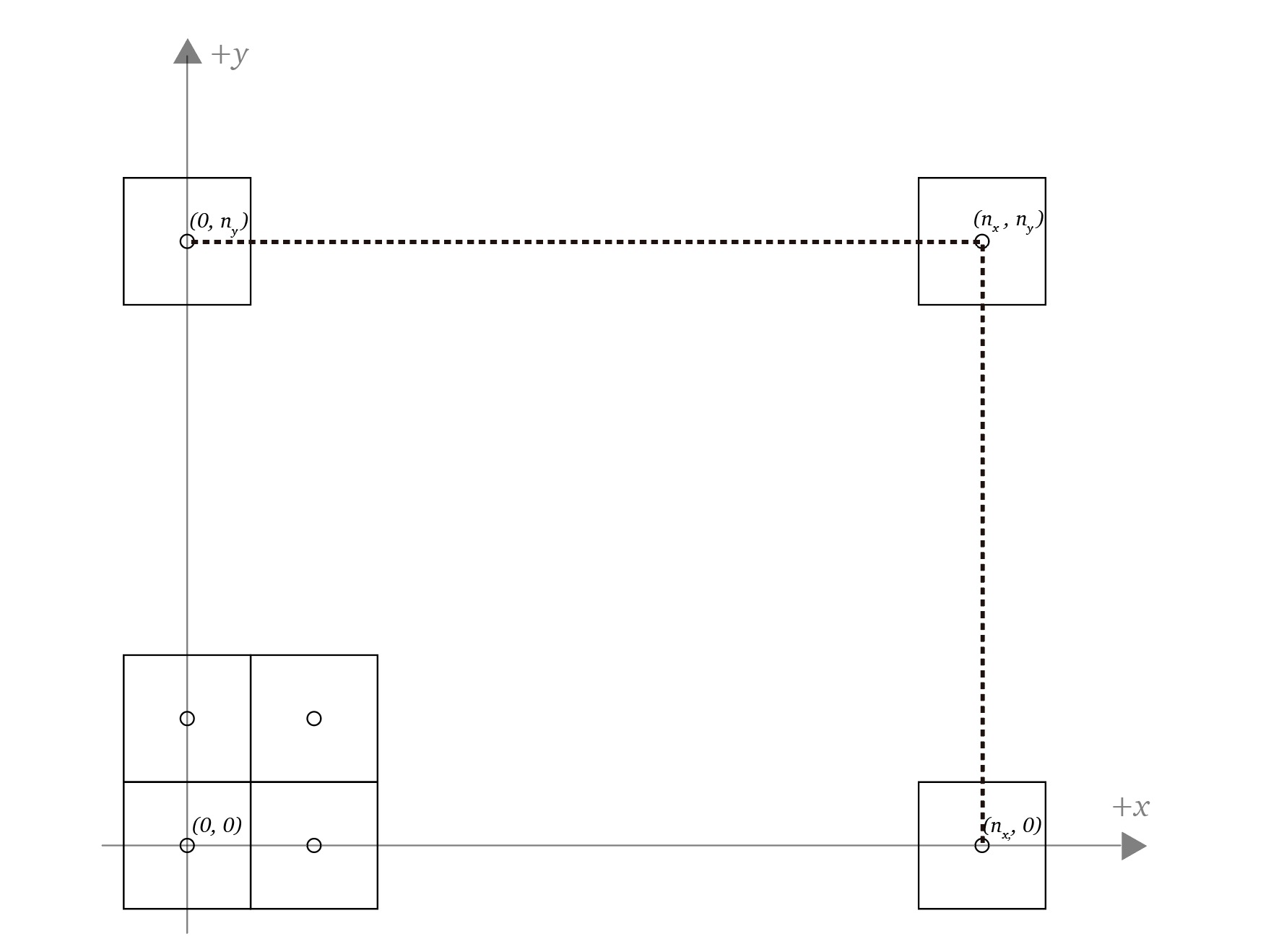

Orthographic projection

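Let $l, r, b, t, n, f$ abbreviate left, right, bottom, top, near and far, let $(x_v, y_v, z_v)$ be a point in view space and $(x_c, y_c, z_c, w_c)$ the same point in clip space. Since the mapping of the range $[l, r]$ to the range $[-1, 1]$ is linear, we can use the equation of a line, $x_c = m x_v + \beta$, where the slope is

$$m = \frac{1 - (-1)}{r - l} = \frac{2}{r - l}$$

and $\beta$ follows from substituting the known pair $(x_v, x_c) = (r, 1)$: $1 = \frac{2r}{r - l} + \beta$, so $\beta = -\frac{r + l}{r - l}$.

Finally

$$x_c = \frac{2}{r - l} x_v - \frac{r + l}{r - l}$$

We can adapt this result to the $y$ coordinate, which maps the range $[b, t]$ to $[-1, 1]$:

$$y_c = \frac{2}{t - b} y_v - \frac{t + b}{t - b}$$

The $z$ coordinate needs one extra consideration: the camera looks down the $-z$ axis in view space, so the near and far planes sit at $z_v = -n$ and $z_v = -f$, and the range $[-n, -f]$ is mapped to $[-1, 1]$:

$$z_c = -\frac{2}{f - n} z_v - \frac{f + n}{f - n}$$

The $w$ coordinate is left unchanged.

The transformation matrix from view space to clip space is

$$
M_{ortho} =
\begin{bmatrix}
\frac{2}{r - l} & 0 & 0 & -\frac{r + l}{r - l} \\
0 & \frac{2}{t - b} & 0 & -\frac{t + b}{t - b} \\
0 & 0 & -\frac{2}{f - n} & -\frac{f + n}{f - n} \\
0 & 0 & 0 & 1
\end{bmatrix}
$$

Finally note that $w_c = 1$, so the later division by $w_c$ leaves the coordinates unchanged.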
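
The orthographic mapping can be sketched in a few lines of Python. This is an illustration rather than a reference implementation: it assumes the matrix layout documented for `glOrtho` (each of $[l, r]$, $[b, t]$ mapped to $[-1, 1]$, and view-space $z \in [-n, -f]$ mapped to $[-1, 1]$), and the helper names `orthographic` and `transform` are hypothetical.

```python
def orthographic(l, r, b, t, n, f):
    """Row-major 4x4 matrix mapping the box [l, r] x [b, t] x [-n, -f]
    (view space) to the cube [-1, 1]^3."""
    return [
        [2.0 / (r - l), 0.0, 0.0, -(r + l) / (r - l)],
        [0.0, 2.0 / (t - b), 0.0, -(t + b) / (t - b)],
        [0.0, 0.0, -2.0 / (f - n), -(f + n) / (f - n)],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform(matrix, point):
    """Multiply a 4x4 matrix by a homogeneous column vector."""
    return [sum(matrix[i][j] * point[j] for j in range(4)) for i in range(4)]


m = orthographic(-2.0, 4.0, -1.0, 3.0, 1.0, 11.0)
near_corner = transform(m, [-2.0, -1.0, -1.0, 1.0])   # bottom left near
far_corner = transform(m, [4.0, 3.0, -11.0, 1.0])     # top right far
```

With these inputs the bottom left near corner should land at approximately $(-1, -1, -1)$ and the top right far corner at approximately $(1, 1, 1)$, both with $w = 1$.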

Building the matrix using combined transformations

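A simpler way to think about this orthographic projection transformation is to split it into three steps (with $l$, $b$, $n$ denoting the left, bottom and near parameters):

- translation of the bottom left near corner to the origin, i.e. $(l, b, -n) \to (0, 0, 0)$

- scale it to be a 2-unit length cube, flipping the $z$ axis because the view volume extends along $-z$

- translation of the bottom left corner from the origin, i.e. $(0, 0, 0) \to (-1, -1, -1)$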
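
The three steps above can be sketched as a product of elementary matrices. The helpers `translation`, `scaling`, `matmul` and `orthographic_from_steps` are hypothetical names used only for this illustration, and the negative $z$ scale reflects the assumption that the camera looks down the $-z$ axis in view space.

```python
def translation(tx, ty, tz):
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def scaling(sx, sy, sz):
    return [[sx, 0.0, 0.0, 0.0],
            [0.0, sy, 0.0, 0.0],
            [0.0, 0.0, sz, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two row-major 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def orthographic_from_steps(l, r, b, t, n, f):
    # Step 1: move the corner (l, b, -n) to the origin.
    to_origin = translation(-l, -b, n)
    # Step 2: scale to a cube of side 2; the z scale is negative (axis flip).
    to_two_unit_cube = scaling(2.0 / (r - l), 2.0 / (t - b), -2.0 / (f - n))
    # Step 3: move the corner at the origin to (-1, -1, -1).
    to_canonical = translation(-1.0, -1.0, -1.0)
    # Matrices act on column vectors, so the first step is the rightmost factor.
    return matmul(to_canonical, matmul(to_two_unit_cube, to_origin))


m = orthographic_from_steps(-2.0, 4.0, -1.0, 3.0, 1.0, 11.0)
```

The translation column of the product should come out as $-\frac{r+l}{r-l}$, $-\frac{t+b}{t-b}$ and $-\frac{f+n}{f-n}$, matching the usual closed form of the orthographic matrix.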

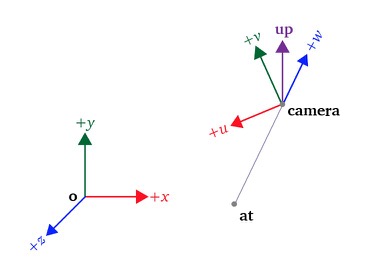

Perspective projection

Projective geometry concepts are used in this type of projection, particularly the fact that objects farther from the point of view appear smaller after projection. This type of projection mimics how we perceive objects in reality.

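A perspective projection matrix is built with 6 parameters: left, right, bottom, top, near, far

- left, right: $x$ coordinates of the left and right clipping planes, measured on the near plane

- bottom, top: $y$ coordinates of the bottom and top clipping planes, measured on the near plane

- near, far: planes perpendicular to the $z$-axis, given as positive distances from the viewpoint

These parameters define a truncated pyramid, also called a frustum.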

Perspective projection

General perspective projection matrix

The mapping of the range $[l, r]$ to the range $[-1, 1]$ (and likewise of $[b, t]$) can be split into two steps:

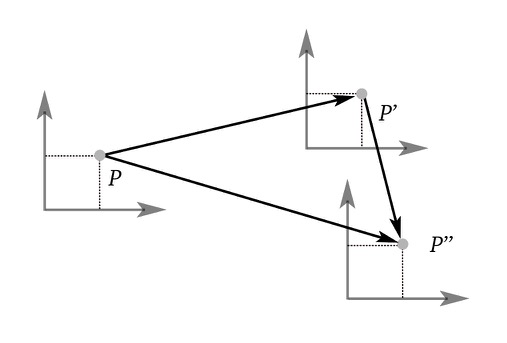

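- Project all the points to the near plane; this way all the $x$ and $y$ coordinates of points inside the frustum fall in the ranges $[l, r]$ and $[b, t]$ respectively

- Map all the values in the ranges $[l, r]$ and $[b, t]$ to the range $[-1, 1]$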

Top view of the frustum

Side view of the frustum

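Let $(x_v, y_v, z_v)$ be a point in view space inside the frustum and $(x_p, y_p, -n)$ its projection onto the near plane, where $n$ is the near distance. By similar triangles in the top and side views of the frustum

$$x_p = \frac{n \, x_v}{-z_v}, \qquad y_p = \frac{n \, y_v}{-z_v}$$

Note that both quantities are inversely proportional to $-z_v$.

The point in homogeneous coordinates is the clip-space point obtained by multiplying the view-space point by the projection matrix $M$:

$$
\begin{bmatrix} x_c \\ y_c \\ z_c \\ w_c \end{bmatrix}
= M \begin{bmatrix} x_v \\ y_v \\ z_v \\ 1 \end{bmatrix}
$$

OpenGL will then project any 4D homogeneous coordinate to the 3D hyperplane $w = 1$ by dividing every component by $w_c$:

$$(x_n, y_n, z_n) = \left( \frac{x_c}{w_c}, \frac{y_c}{w_c}, \frac{z_c}{w_c} \right)$$

We can take advantage of this process and use $-z_v$ as $w_c$, which fixes the last row of the matrix:

$$
\begin{bmatrix} x_c \\ y_c \\ z_c \\ w_c \end{bmatrix}
=
\begin{bmatrix}
\cdot & \cdot & \cdot & \cdot \\
\cdot & \cdot & \cdot & \cdot \\
\cdot & \cdot & \cdot & \cdot \\
0 & 0 & -1 & 0
\end{bmatrix}
\begin{bmatrix} x_v \\ y_v \\ z_v \\ 1 \end{bmatrix}
$$

Where $w_c = -z_v$.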

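Next, the projected coordinates $x_p \in [l, r]$ and $y_p \in [b, t]$ on the near plane are mapped linearly to $[-1, 1]$:

$$x_n = \frac{2}{r - l} x_p - \frac{r + l}{r - l}, \qquad y_n = \frac{2}{t - b} y_p - \frac{t + b}{t - b}$$

Next we substitute the values of $x_p = \frac{n \, x_v}{-z_v}$ and $y_p = \frac{n \, y_v}{-z_v}$, the projections of the view-space point $(x_v, y_v, z_v)$ onto the near plane:

$$
x_n = \frac{2n}{r - l} \cdot \frac{x_v}{-z_v} - \frac{r + l}{r - l}
= \frac{2n}{r - l} \cdot \frac{x_v}{-z_v} + \frac{r + l}{r - l} \cdot \frac{z_v}{-z_v}
= \frac{\frac{2n}{r - l} x_v + \frac{r + l}{r - l} z_v}{-z_v}
$$

Note that the second fraction is manipulated so that it’s also divisible by $-z_v$.

Similarly the value of $y_n$ is

$$y_n = \frac{\frac{2n}{t - b} y_v + \frac{t + b}{t - b} z_v}{-z_v}$$

Then the transformation matrix, whose last row $(0, 0, -1, 0)$ produces $w_c = -z_v$, has its first two rows determined (the third row is still unknown):

$$
\begin{bmatrix} x_c \\ y_c \\ z_c \\ w_c \end{bmatrix}
=
\begin{bmatrix}
\frac{2n}{r - l} & 0 & \frac{r + l}{r - l} & 0 \\
0 & \frac{2n}{t - b} & \frac{t + b}{t - b} & 0 \\
\cdot & \cdot & \cdot & \cdot \\
0 & 0 & -1 & 0
\end{bmatrix}
\begin{bmatrix} x_v \\ y_v \\ z_v \\ 1 \end{bmatrix}
$$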

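Next we need to find the value of $z_n$, i.e. the third row of the matrix.

Since $z_n$ does not depend on $x_v$ or $y_v$, the first two entries of the third row are zero and the row has the form $(0, 0, A, B)$.

Then, with $w_c = -z_v$,

$$z_n = \frac{z_c}{w_c} = \frac{A z_v + B w_v}{-z_v}$$

Since $w_v = 1$ in view space

$$z_n = \frac{A z_v + B}{-z_v}$$

Note that the value is not linear in $z_v$ but it needs to be mapped to $[-1, 1]$: the near plane $z_v = -n$ maps to $-1$ and the far plane $z_v = -f$ maps to $1$, which gives

$$-An + B = -n, \qquad -Af + B = f$$

Subtracting the second equation from the first

$$A(f - n) = -(f + n)$$

Solving for $A$, and then for $B$ using the first equation,

$$A = -\frac{f + n}{f - n}, \qquad B = An - n = -\frac{2fn}{f - n}$$

Substituting the values of $A$ and $B$ gives the general perspective projection matrix

$$
M_{persp} =
\begin{bmatrix}
\frac{2n}{r - l} & 0 & \frac{r + l}{r - l} & 0 \\
0 & \frac{2n}{t - b} & \frac{t + b}{t - b} & 0 \\
0 & 0 & -\frac{f + n}{f - n} & -\frac{2fn}{f - n} \\
0 & 0 & -1 & 0
\end{bmatrix}
$$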
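
To make the role of the division by $w$ concrete, here is a small Python sketch. It assumes the matrix layout documented for `glFrustum`, with the camera looking down the $-z$ axis; the names `frustum` and `project` are hypothetical helpers for this illustration.

```python
def frustum(l, r, b, t, n, f):
    """Row-major 4x4 perspective matrix for the frustum (l, r, b, t, n, f)."""
    return [
        [2.0 * n / (r - l), 0.0, (r + l) / (r - l), 0.0],
        [0.0, 2.0 * n / (t - b), (t + b) / (t - b), 0.0],
        [0.0, 0.0, -(f + n) / (f - n), -2.0 * f * n / (f - n)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def project(matrix, point):
    """Transform a view-space (x, y, z) point to clip space, then divide by w."""
    homogeneous = (point[0], point[1], point[2], 1.0)
    clip = [sum(matrix[i][j] * homogeneous[j] for j in range(4))
            for i in range(4)]
    w = clip[3]  # equals -z of the view-space point
    return [component / w for component in clip[:3]]


m = frustum(-1.0, 1.0, -1.0, 1.0, 1.0, 10.0)
near_corner = project(m, (1.0, 1.0, -1.0))     # top right corner, near plane
far_corner = project(m, (10.0, 10.0, -10.0))   # top right corner, far plane
```

Both corners lie on the same edge of the frustum, so after the division they should share $x = y = 1$ and differ only in depth ($-1$ on the near plane, $1$ on the far plane).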

Symmetric perspective projection matrix

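If the viewing volume is symmetric, i.e. $r = -l$ and $t = -b$, then $r + l = 0$, $r - l = 2r$, $t + b = 0$ and $t - b = 2t$.

Then the general perspective projection matrix simplifies to

$$
\begin{bmatrix}
\frac{n}{r} & 0 & 0 & 0 \\
0 & \frac{n}{t} & 0 & 0 \\
0 & 0 & -\frac{f + n}{f - n} & -\frac{2fn}{f - n} \\
0 & 0 & -1 & 0
\end{bmatrix}
$$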

Symmetric perspective projection matrix from field of view/aspect

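gluPerspective receives, instead of the $l, r, b, t$ parameters, two arguments:

- field of view ($fov$): the angle of the field of view in the $y$ direction

- aspect ($aspect$): the ratio of the width to the height of the view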

fov

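We see that the value of $t$ follows from the right triangle formed by half of the field of view and the near distance $n$:

$$\tan\left(\frac{fov}{2}\right) = \frac{t}{n} \implies t = n \tan\left(\frac{fov}{2}\right)$$

We can find the value of $r$ from the aspect ratio, $aspect = \frac{2r}{2t} = \frac{r}{t}$:

$$r = t \cdot aspect = n \cdot aspect \cdot \tan\left(\frac{fov}{2}\right)$$

Substituting $t$ and $r$ into the symmetric perspective projection matrix, whose first two diagonal entries are $\frac{n}{r}$ and $\frac{n}{t}$, gives

$$
\begin{bmatrix}
\frac{1}{aspect \cdot \tan(fov / 2)} & 0 & 0 & 0 \\
0 & \frac{1}{\tan(fov / 2)} & 0 & 0 \\
0 & 0 & -\frac{f + n}{f - n} & -\frac{2fn}{f - n} \\
0 & 0 & -1 & 0
\end{bmatrix}
$$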
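
A short Python sketch of the field of view/aspect form follows. It assumes the angle is given in degrees (as `gluPerspective` documents for its `fovy` argument) and derives $t$ and $r$ from the near distance before filling in the symmetric matrix; the function name `perspective` is a hypothetical stand-in, not the GLU API.

```python
import math


def perspective(fov_degrees, aspect, n, f):
    """Symmetric perspective matrix from a vertical field of view and aspect."""
    tan_half_fov = math.tan(math.radians(fov_degrees) / 2.0)
    t = n * tan_half_fov   # half height of the near plane
    r = t * aspect         # half width of the near plane
    return [
        [n / r, 0.0, 0.0, 0.0],
        [0.0, n / t, 0.0, 0.0],
        [0.0, 0.0, -(f + n) / (f - n), -2.0 * f * n / (f - n)],
        [0.0, 0.0, -1.0, 0.0],
    ]


m = perspective(90.0, 2.0, 1.0, 10.0)
```

With a 90 degree field of view, $\tan(fov / 2) = 1$, so the $y$ scale should be approximately $1$ and the $x$ scale approximately $1 / aspect = 0.5$.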