iterations, batch, batch size and epoch

- A batch is the set of examples used in one iteration; the number of examples in the set is the batch size.

- For example, the batch size of SGD is 1, while the batch size of mini-batch SGD is usually between 10 and 1000. Batch size is usually fixed during training and inference; however, TensorFlow does permit dynamic batch sizes.

- An iteration is the span in which the system processes one batch, that is, batch-size examples.

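- An epoch spans enough iterations to process every example in the dataset once, i.e. an epoch represents $\left\lceil \frac{N}{\text{batchSize}} \right\rceil$ training iterations, where $N$ is the number of examples. The ceiling accounts for a final, smaller batch when $N$ is not a multiple of the batch size.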
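A minimal Python sketch of how batches, iterations and epochs relate, assuming the dataset is a plain list and skipping shuffling (real training pipelines usually reshuffle every epoch). `iterations_per_epoch` and `batches` are hypothetical helpers for illustration, not a library API.

```python
import math

def iterations_per_epoch(num_examples: int, batch_size: int) -> int:
    """Number of iterations needed to see every example once.

    The last batch may be smaller than batch_size when num_examples
    is not a multiple of batch_size, hence the ceiling.
    """
    return math.ceil(num_examples / batch_size)

def batches(examples, batch_size):
    """Yield consecutive batches; the final one may be partial."""
    for start in range(0, len(examples), batch_size):
        yield examples[start:start + batch_size]

dataset = list(range(10))  # N = 10 toy examples
for epoch in range(2):
    for iteration, batch in enumerate(batches(dataset, batch_size=4)):
        # A real training loop would compute the loss on `batch`
        # and update the model parameters here.
        print(f"epoch {epoch}, iteration {iteration}: {batch}")
```

With N = 10 and a batch size of 4, each epoch runs three iterations and the last batch holds only two examples. Setting the batch size to 1 gives the SGD case: one iteration per example.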

(Figure: batch, batch size, epoch)

k-fold cross validation

From https://www.analyticsvidhya.com/blog/2018/05/improve-model-performance-cross-validation-in-python-r/

- Randomly split your entire dataset into k “folds”

- For each fold, build your model on the remaining k – 1 folds of the dataset, then test it on the held-out fold to check its effectiveness

- Record the error of the model's predictions on the held-out fold

- Repeat this until each of the k-folds has served as the test set

- The average of your k recorded errors is called the cross-validation error and will serve as your performance metric for the model

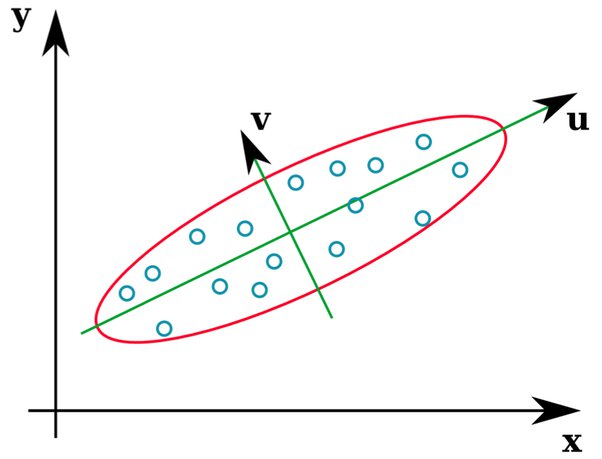
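The steps above can be sketched in plain Python. The model is a deliberately trivial, hypothetical one (it always predicts the mean training target) and the error metric is mean squared error; the `fit` and `error` parameters are placeholders for whatever model and metric you actually use.

```python
import random
from statistics import mean

def k_fold_indices(n, k, seed=0):
    """Randomly partition indices 0..n-1 into k roughly equal folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th shuffled index, starting at position i.
    return [indices[i::k] for i in range(k)]

def cross_validation_error(xs, ys, k, fit, error, seed=0):
    folds = k_fold_indices(len(xs), k, seed)
    fold_errors = []
    for test_idx in folds:
        test_set = set(test_idx)
        train_idx = [i for i in range(len(xs)) if i not in test_set]
        # Build the model on the other k - 1 folds.
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        # Test on the held-out fold and record its error.
        fold_errors.append(
            error(model, [xs[i] for i in test_idx], [ys[i] for i in test_idx]))
    # The cross-validation error is the average of the k recorded errors.
    return mean(fold_errors), fold_errors

# Hypothetical toy model: always predict the mean training target.
def fit_mean(xs, ys):
    return mean(ys)

def mse(model, xs, ys):
    return mean((y - model) ** 2 for y in ys)

xs = list(range(20))
ys = [2 * x + 1 for x in xs]
cv_error, per_fold = cross_validation_error(xs, ys, k=5, fit=fit_mean, error=mse)
print(f"per-fold errors: {per_fold}")
print(f"cross-validation error: {cv_error:.2f}")
```

Every example lands in exactly one test fold, so each one is used for testing once and for training k – 1 times.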

feature extraction

Merge several correlated features into one. Also see dimensionality reduction
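The note does not name a specific method. One common way to merge correlated features is principal component analysis (PCA), keeping only the first component. The sketch below assumes NumPy and uses made-up data: one height measured in two units, with some noise added.

```python
import numpy as np

rng = np.random.default_rng(0)
# Two strongly correlated toy features: height in cm and (noisy) height in inches.
height_cm = rng.normal(170, 10, size=200)
height_in = height_cm / 2.54 + rng.normal(0, 0.5, size=200)
X = np.column_stack([height_cm, height_in])

def first_principal_component(X):
    """Project X onto its first principal component (one new feature)."""
    Xc = X - X.mean(axis=0)        # center each feature
    Xs = Xc / Xc.std(axis=0)       # standardize so units don't dominate
    # Rows of vt are principal directions, ordered by explained variance.
    _, s, vt = np.linalg.svd(Xs, full_matrices=False)
    explained = s[0] ** 2 / np.sum(s ** 2)
    return Xs @ vt[0], explained

merged, explained = first_principal_component(X)
print(merged.shape)  # one merged feature per sample
print(f"variance kept by the merged feature: {explained:.3f}")
```

Because the two inputs are nearly redundant, the single merged feature keeps almost all of their combined variance.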

sampling noise/bias

- Sampling noise: nonrepresentative sample data as a result of chance (typically when the sample is too small)
- Sampling bias: nonrepresentative sample data as a result of a flaw in the sampling method
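A small simulation can make the distinction concrete. It assumes a hypothetical population of 10,000 log-normally distributed values and compares repeated sample means. Small random samples scatter widely around the true mean (noise), while large samples drawn by a flawed method that only reaches the top half of the population are consistently too high (bias).

```python
import random
from statistics import mean, pstdev

rng = random.Random(42)

# Hypothetical population: 10,000 log-normally distributed values.
population = [rng.lognormvariate(3, 0.5) for _ in range(10_000)]
true_mean = mean(population)
# A flawed sampling frame that can only reach the upper half of the population.
upper_half = sorted(population)[len(population) // 2:]

def sample_mean(size, biased=False):
    """Estimate the population mean from one random sample."""
    frame = upper_half if biased else population
    return mean(rng.sample(frame, size))

results = {}
for label, size, biased in [("small random", 10, False),
                            ("large random", 1000, False),
                            ("large biased", 1000, True)]:
    estimates = [sample_mean(size, biased) for _ in range(200)]
    offset = mean(estimates) - true_mean   # systematic error -> bias
    spread = pstdev(estimates)             # chance variation -> noise
    results[label] = (offset, spread)
    print(f"{label:>12}: offset={offset:+.2f}, spread={spread:.2f}")
```

Increasing the sample size shrinks the spread (noise) but does nothing about the offset introduced by the flawed sampling frame (bias).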